How to Deploy LLMs in Production: Strategies, Pitfalls, and Best Practices for Scalable AI

Deploying large language models (LLMs) in production comes with its own set of complex challenges. From latency issues and compliance risks to hallucinations and infrastructure constraints, it’s a high-stakes environment that requires careful planning.

By reading this blog post, you will learn about LLM deployment challenges and how to overcome them, with strategies for infrastructure, automation, testing, and monitoring that help you scale with confidence and control.

Whether you’re scaling up an internal LLM tool or integrating one into your app, you’ll leave with actionable strategies for reducing risk and improving outcomes.

Define Success Before You Deploy: Business & Technical Goal Setting

Before diving into technical implementation, make sure your team agrees on what success looks like for your LLM deployment. This section outlines how to align business goals with technical benchmarks, so your team starts with clarity and purpose.

Clarify Business Use Cases and ROI Expectations

Start by defining your primary objective. Are you trying to reduce customer service wait times? Automate internal reports? Improve search results?

Set measurable ROI expectations early:

- Customer satisfaction: Use indicators such as CSAT (Customer Satisfaction) scores or NPS (Net Promoter Score) as benchmarks

- Efficiency: Time savings or cost reductions

- Conversion impact: Lead qualification or sales improvements

Align Technical Objectives with End-User Needs

Consider what “good performance” means for your LLM application:

- Latency under 500ms?

- 99.99% uptime?

- Predictable responses under stress and information overload?

Setting these benchmarks early allows you to make infrastructure and model decisions based on real need, not guesswork.
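
To make targets like these concrete, it can help to turn them into numbers you can check. The sketch below is illustrative only: the function names, the choice of a nearest-rank 95th percentile, and the 30-day window are assumptions, not part of any particular monitoring tool. It shows that a 99.99% uptime target leaves roughly 4.3 minutes of downtime per 30 days, and how a latency target might be checked against measured samples.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def allowed_downtime_minutes(uptime_pct, days=30):
    """Downtime budget (in minutes) implied by an uptime target over `days`."""
    return (1 - uptime_pct / 100) * days * 24 * 60


def meets_latency_target(latencies_ms, target_ms=500, pct=95):
    """True if the chosen percentile of measured latencies is within target."""
    return percentile(latencies_ms, pct) <= target_ms
```

Checking a high percentile rather than the average is a deliberate choice here: a handful of slow responses can hide behind a healthy mean.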

Ready to define success metrics and goals for your AI deployment?

Our AI strategists in Ottawa can help you scope your first project. Get in touch!

Pre-Deployment Testing: Catch Pitfalls Before They Scale

Before launching into production, stress-testing your system is crucial. In this section, we explore the pre-deployment checks and staging practices that reveal critical flaws before they impact users.

Here is a typical and realistic scenario: A fintech company deploying a customer service chatbot used a mirrored staging environment to simulate production traffic spikes. During load testing, they discovered the model experienced 2–3 second latency spikes during concurrent user interactions. With this insight, they implemented output caching and fine-tuned the serving model, bringing average response times below 400ms before going live!

Accuracy, Latency & Load Testing in Staging Environments

Use a staging environment that mirrors your production stack. Test:

- Accuracy: Does it return correct and helpful responses?

- Latency: How fast are answers under different loads?

- Resilience: What happens during high concurrency?
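
A latency and concurrency check along these lines could be sketched as follows. This is a minimal illustration, not a full load-testing tool: `fake_model_call` is a hypothetical stand-in for a request to your staging endpoint, and the concurrency limit and prompt list are placeholders you would replace with realistic traffic.

```python
import asyncio
import time


async def fake_model_call(prompt: str) -> str:
    """Hypothetical stand-in for a real request to the staging endpoint."""
    await asyncio.sleep(0.01)  # simulated inference time
    return f"echo: {prompt}"


async def timed_call(call, prompt, semaphore):
    """Run one request and return its latency in milliseconds.

    Timing starts once the request holds a concurrency slot, so time spent
    waiting for a slot is not included.
    """
    async with semaphore:
        start = time.perf_counter()
        await call(prompt)
        return (time.perf_counter() - start) * 1000


async def run_load_test(call, prompts, concurrency):
    """Send all prompts with at most `concurrency` requests in flight."""
    semaphore = asyncio.Semaphore(concurrency)
    tasks = [timed_call(call, p, semaphore) for p in prompts]
    return await asyncio.gather(*tasks)


def summarize(latencies_ms):
    """Basic summary statistics for a non-empty list of latencies."""
    ordered = sorted(latencies_ms)
    return {
        "count": len(ordered),
        "min_ms": ordered[0],
        "max_ms": ordered[-1],
        "mean_ms": sum(ordered) / len(ordered),
    }
```

For example, `summarize(asyncio.run(run_load_test(fake_model_call, ["hello"] * 50, concurrency=10)))` runs 50 simulated requests, ten at a time. Repeating the run at several concurrency levels shows where latency starts to climb.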

Common Pitfalls: What Goes Wrong in Real-World Deployments

Watch out for these known issues:

- Cold starts: Models taking seconds to respond after inactivity

- Prompt brittleness: Output quality varies wildly with small changes in prompt wording

- Security holes: Inadequate prompt sanitization

- Lack of observability: Poor logging and error visibility

- Version confusion: Hard to track which model is in use

Don’t just test the model—test the system.

Book a pre-deployment audit or AI readiness assessment to test your model’s real-world readiness.

Choosing Your LLM Infrastructure: Cloud vs On-Prem

Your deployment environment shapes your costs, control, and scalability. This section compares cloud, on-premises, and hybrid setups so you can make informed infrastructure decisions.

Cloud Deployment Benefits and Trade-Offs

Pros:

- Scales quickly

- Easy to integrate with CI/CD

- Pay-as-you-go billing

Cons:

- Vendor lock-in

- Regulatory concerns

- Cost spikes under heavy use

On-Premises or Hybrid Solutions for Full Control

Use on-prem if:

- You handle sensitive or regulated data

- You want full control over performance tuning

Hybrid tip: Use cloud for R&D, then move mature models on-prem for stability and compliance.

Unsure which infrastructure is right for you? Let us guide your architecture decisions with a tailored cost and compliance assessment. Get in touch.

Architecture & Automation: CI/CD for LLMs

Automation ensures reliable and repeatable model updates. This section explains how CI/CD pipelines, containerization, and model registries contribute to stable LLM operations.

Why Continuous Integration & Delivery Pipelines Matter

CI/CD pipelines:

- Reduce human error

- Improve version control

- Ensure consistent testing and deployment

Tools & Patterns for Reproducible LLM Workflows

- Git + MLflow: Track models and metadata

- Docker: Containerize your serving infrastructure

- Model registry: Log versions, performance, and rollout history

- Rollback plans: Always have a way to revert to a previous model
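
To illustrate how a model registry and a rollback plan fit together, here is a minimal in-memory sketch. It is not MLflow's API or any specific product: `ModelVersion`, `ModelRegistry`, and the `artifact_uri` field are hypothetical names chosen for the example. A real registry would persist this state and tie each version to a container image and a Git commit.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: str
    artifact_uri: str  # hypothetical location of the model artifact
    metrics: Dict[str, float] = field(default_factory=dict)


class ModelRegistry:
    """Tracks registered versions and the order in which they went live."""

    def __init__(self) -> None:
        self._versions: Dict[str, ModelVersion] = {}
        self._rollout_history: List[str] = []

    def register(self, model: ModelVersion) -> None:
        if model.version in self._versions:
            raise ValueError(f"version {model.version} already registered")
        self._versions[model.version] = model

    def promote(self, version: str) -> None:
        """Mark a registered version as the one serving traffic."""
        if version not in self._versions:
            raise KeyError(version)
        self._rollout_history.append(version)

    @property
    def live(self) -> Optional[str]:
        return self._rollout_history[-1] if self._rollout_history else None

    def rollback(self) -> str:
        """Revert to the version that was live before the current one."""
        if len(self._rollout_history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._rollout_history.pop()
        return self._rollout_history[-1]
```

Keeping the rollout history separate from the set of registered versions means a rollback is a lookup rather than a rebuild, which is what makes it fast under pressure.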

Need help setting up CI/CD for AI? We’ll help you integrate model registries, pipelines, and testing into your current stack. Contact Us.

Optimizing Performance: Speed, Cost & Efficiency

Efficiency is the name of the game. This section dives into how to tune your deployment for speed, responsiveness, and budget control without sacrificing quality.

| Model Type | Avg. Latency (ms) | Best Use Case |
| --- | --- | --- |
| GPT-3.5 Turbo | 400–600 | High throughput chatbots, productivity apps |
| GPT-4 Turbo | 900–1,500 | Enterprise QA, advanced summarization, RAG |
| GPT-4o | 400–800 | Multimodal tasks (text, vision, audio), human-like assistants |
| Claude Opus 4 | 800–1,600 | Advanced coding, multi-step reasoning, AI agents, long-context |
| Claude Sonnet 4 | 500–1,200 | Balanced: workflows, RAG, robust coding, high-throughput chat |
| Claude Haiku | 300–700 | Fastest Claude: real-time UI, inexpensive high-traffic tasks |
| DistilBERT | <300 | Cost-sensitive, low-latency apps (e.g., mobile UIs) |
| Llama 4 (Meta) | 300–800 | On-prem LLMs, private fine-tuning, developer platforms |
| Mistral (Large / Mixtral) | 300–800 | Multilingual/multimodal tasks, open deployments, RAG |
| Gemini 2.5 (Google) | 400–900 | Research, enterprise copilots, high-throughput multimodal use |

Note: Latency depends on implementation details and usage volume.

Techniques to Reduce Latency and Improve Speed

- Prompt engineering: Reduce token count, make prompts deterministic

- Caching: Save previous outputs for reuse

- Model tuning: Fine-tune lighter models on specific tasks
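
The caching idea above can be sketched as a small least-recently-used cache keyed on a normalized prompt. This is an illustrative assumption about how you might implement it: the `ResponseCache` class and its normalization rule (lowercasing and collapsing whitespace) are hypothetical, and `generate` stands in for whatever function calls your model.

```python
import hashlib
from collections import OrderedDict


class ResponseCache:
    """LRU cache for model outputs, keyed on a normalized prompt."""

    def __init__(self, max_entries: int = 1024) -> None:
        self.max_entries = max_entries
        self.hits = 0
        self.misses = 0
        self._entries = OrderedDict()

    @staticmethod
    def _key(prompt: str) -> str:
        # Treat prompts that differ only in case or spacing as the same.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get_or_compute(self, prompt: str, generate):
        key = self._key(prompt)
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        value = generate(prompt)
        self._entries[key] = value
        if len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # evict least recently used
        return value
```

Exact-match caching like this only pays off when users repeat the same questions, and it assumes a cached answer is still acceptable later, so it suits FAQ-style traffic better than personalized conversations.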

Cost Control in Production LLM Environments

- Monitor token usage and set usage limits

- Use autoscaling on serverless endpoints

- Choose models with optimal size-to-performance ratio
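
As a rough sketch of monitoring token usage against a limit, the class below tracks a daily token budget and estimates spend. The `TokenBudget` name, the daily limit, and the per-1,000-token price are all placeholders for illustration; actual pricing varies by provider and model.

```python
class TokenBudget:
    """Tracks token usage against a daily limit and estimates cost."""

    def __init__(self, daily_limit_tokens: int, price_per_1k_tokens: float) -> None:
        self.daily_limit_tokens = daily_limit_tokens
        self.price_per_1k_tokens = price_per_1k_tokens  # placeholder price
        self.used_tokens = 0

    def try_spend(self, tokens: int) -> bool:
        """Record usage if it fits in the budget; otherwise refuse it."""
        if tokens < 0:
            raise ValueError("tokens must be non-negative")
        if self.used_tokens + tokens > self.daily_limit_tokens:
            return False
        self.used_tokens += tokens
        return True

    def remaining_tokens(self) -> int:
        return self.daily_limit_tokens - self.used_tokens

    def estimated_cost(self) -> float:
        return self.used_tokens / 1000 * self.price_per_1k_tokens
```

When `try_spend` returns `False`, the caller decides what happens next: queue the request, fall back to a smaller model, or return a friendly limit message.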

Security, Compliance, and Privacy in LLM Deployments

LLMs introduce new threat surfaces. This section outlines proactive ways to keep your deployment secure and compliant with evolving data protection laws.

Protecting Against Prompt Injection and Unauthorized Access

- Sanitize all inputs

- Use guardrails or output filters

- Apply role-based access control
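
The three measures above might look like the following in their simplest form. Treat this as a sketch under stated assumptions: the length limit, the two regular expressions, and the role table are hypothetical examples. Pattern matching catches only the crudest injection attempts, so it complements guardrails and output filtering rather than replacing them.

```python
import re
import unicodedata

MAX_INPUT_CHARS = 4000  # hypothetical limit

# Hypothetical examples of phrases worth flagging for review.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"reveal (your |the )?system prompt", re.IGNORECASE),
]

# Hypothetical role table for role-based access control.
ROLE_PERMISSIONS = {
    "viewer": {"chat"},
    "admin": {"chat", "update_prompt"},
}


def sanitize_input(text: str) -> str:
    """Normalize Unicode, drop control characters, and cap the length."""
    normalized = unicodedata.normalize("NFKC", text)
    cleaned = "".join(
        ch for ch in normalized
        if ch in "\n\t" or unicodedata.category(ch)[0] != "C"
    )
    return cleaned[:MAX_INPUT_CHARS].strip()


def looks_like_injection(text: str) -> bool:
    """Heuristic flag only; a False result does not prove the input is safe."""
    return any(p.search(text) for p in SUSPICIOUS_PATTERNS)


def is_allowed(role: str, action: str) -> bool:
    """Unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())
```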

Regulatory Compliance – GDPR, HIPAA, and Beyond

- Encrypt sensitive data in transit and at rest

- Implement audit logs

- Use data governance policies that include model behaviour monitoring
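
One way to make audit logs more trustworthy is to chain entries together so that later edits are detectable. The hash-chaining approach below is an illustrative design choice, not something the points above prescribe, and the field names are assumptions. It does not encrypt anything; encryption in transit and at rest would be handled by your storage and transport layers.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(entry: dict) -> str:
    """Stable SHA-256 digest of an entry's JSON form."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log: list, user_id: str, event: str, detail: str) -> dict:
    """Append an entry that records the hash of the entry before it."""
    entry = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "event": event,
        "detail": detail,
        "prev_hash": log[-1]["hash"] if log else "",
    }
    entry["hash"] = _digest(entry)  # digest is taken before "hash" is added
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Return False if any entry was altered or the chain was broken."""
    prev = ""
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

For regulated data, consider logging a reference or a redacted summary in `detail` rather than the raw prompt, so the audit trail does not become a second copy of sensitive content.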

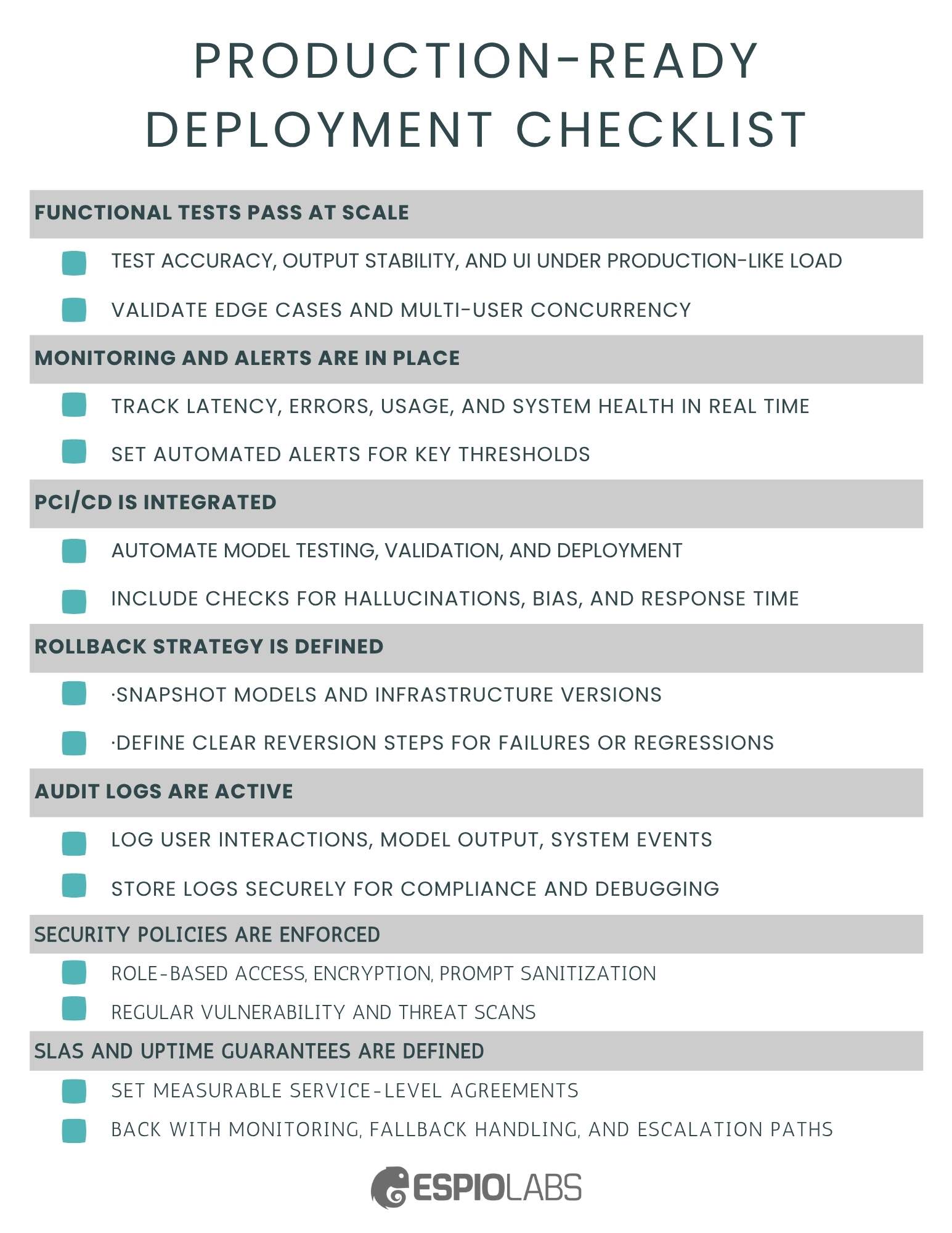

Production-Ready Deployment Checklist

What does “production-ready” really mean? This section presents a comprehensive checklist to ensure you’ve covered all technical, legal, and operational bases.

- Functional tests pass at scale
  - Test accuracy, output stability, and UI under production-like load
  - Validate edge cases and multi-user concurrency

- Monitoring and alerts are in place
  - Track latency, errors, usage, and system health in real time
  - Set automated alerts for key thresholds

- CI/CD is integrated
  - Automate model testing, validation, and deployment
  - Include checks for hallucinations, bias, and response time

- Rollback strategy is defined
  - Snapshot models and infrastructure versions
  - Define clear reversion steps for failures or regressions

- Audit logs are activated
  - Log user interactions, model output, and system events
  - Store logs securely for compliance and debugging

- Security policies are enforced
  - Role-based access, encryption, prompt sanitization
  - Regular vulnerability and threat scans

- SLAs and uptime guarantees are defined
  - Set measurable service-level agreements
  - Back them with monitoring, fallback handling, and escalation paths


If every item on this list is checked, your LLM is ready for real-world deployment with fewer surprises and stronger safeguards in place.

Keep It Agile: Post-Deployment Monitoring & Iteration

Going live is just the beginning. This section explores how to continuously monitor, retrain, and iterate on your deployed LLM for long-term performance and value.

Continuous Monitoring and Error Reporting

- Use Grafana or OpenTelemetry to track latency, errors, and uptime

- Visualize drift in model performance

- Build dashboards for usage patterns and anomalies
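
Before wiring up a full dashboard, the underlying alert logic can be prototyped in a few lines. The sketch below does not use Grafana or OpenTelemetry APIs; `RollingMonitor` and its thresholds are hypothetical, and in practice you would export these measurements to your monitoring stack rather than evaluate them in process.

```python
from collections import deque
from typing import List


class RollingMonitor:
    """Keeps the most recent requests and flags threshold breaches."""

    def __init__(self, window: int = 100, max_error_rate: float = 0.05,
                 max_mean_latency_ms: float = 500.0) -> None:
        self.max_error_rate = max_error_rate
        self.max_mean_latency_ms = max_mean_latency_ms
        self._samples = deque(maxlen=window)  # old samples fall off the end

    def record(self, latency_ms: float, ok: bool) -> None:
        self._samples.append((latency_ms, ok))

    def error_rate(self) -> float:
        if not self._samples:
            return 0.0
        failures = sum(1 for _, ok in self._samples if not ok)
        return failures / len(self._samples)

    def mean_latency_ms(self) -> float:
        if not self._samples:
            return 0.0
        return sum(latency for latency, _ in self._samples) / len(self._samples)

    def alerts(self) -> List[str]:
        """Names of the thresholds currently exceeded within the window."""
        found = []
        if self.error_rate() > self.max_error_rate:
            found.append("error_rate")
        if self.mean_latency_ms() > self.max_mean_latency_ms:
            found.append("latency")
        return found
```

A fixed-size window keeps alerts focused on recent behaviour, so an incident from last week does not mask or trigger today's alarms.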

Iterate on Feedback and Model Updates

- Fine-tune based on real inputs

- Use A/B testing for new model versions

- Maintain a change log and alerting protocol
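
For A/B testing a new model version, one common approach is to assign each user to a variant deterministically, so the same person always sees the same model. The sketch below assumes a stable `user_id` and uses hypothetical variant names ("control" and "candidate"); the 10% default share is a placeholder.

```python
import hashlib

BUCKETS = 10_000


def assign_variant(user_id: str, experiment: str, candidate_share: float = 0.1) -> str:
    """Deterministically map a user to 'candidate' or 'control'.

    Hashing the experiment name together with the user ID keeps assignments
    stable within one experiment and independent across experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % BUCKETS
    return "candidate" if bucket < candidate_share * BUCKETS else "control"
```

Because assignment depends only on the hash, no lookup table is needed, and raising `candidate_share` gradually moves more users to the new model without reshuffling those already on it.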

Talk to us about post-deployment observability and long-term support options for your LLMs.

Your Next Move: Launch with Confidence

Deploying LLMs can be complex, but with the right preparation or by working with an experienced partner, it’s completely manageable. Define your success criteria early, automate your workflows, and stay vigilant on latency, performance, and privacy. A well-planned deployment means fewer surprises and more predictable impact.

Ready to make your deployment enterprise-grade?

Contact our data scientists and AI consultants in Ottawa to get started.